Experimenting with AI in Design

PeerView

PeerView is an experimental interview feedback platform where we used AI tools not only as part of the product vision, but also as collaborators in the design process itself. Developed in the AI in Design course in Northwestern’s EDI master’s program, the project became a sandbox to test how AI could help with everything from stakeholder mapping and copy exploration to interface concepts, while we stayed accountable for the final decisions and ethics behind them.

Date

10/2025 - 12/2025

Location

Evanston, IL

Role

UX Designer, PM

Tools

Figma Make, Cursor, Gemini, ChatGPT

The Challenge

In our AI in Design studio in Northwestern’s EDI master’s program, we were asked to use AI to improve “job fit” for first-time job seekers navigating a tough market with limited guidance. We narrowed the problem to a specific pain point: after rejection, job seekers are rarely given constructive feedback on their interview performance, leaving them unsure what to change, how to practice, or where to focus next. At the same time, both candidates and recruiters are experimenting with AI in the hiring process, creating new opportunities for support but also new risks around generic, dehumanized feedback.

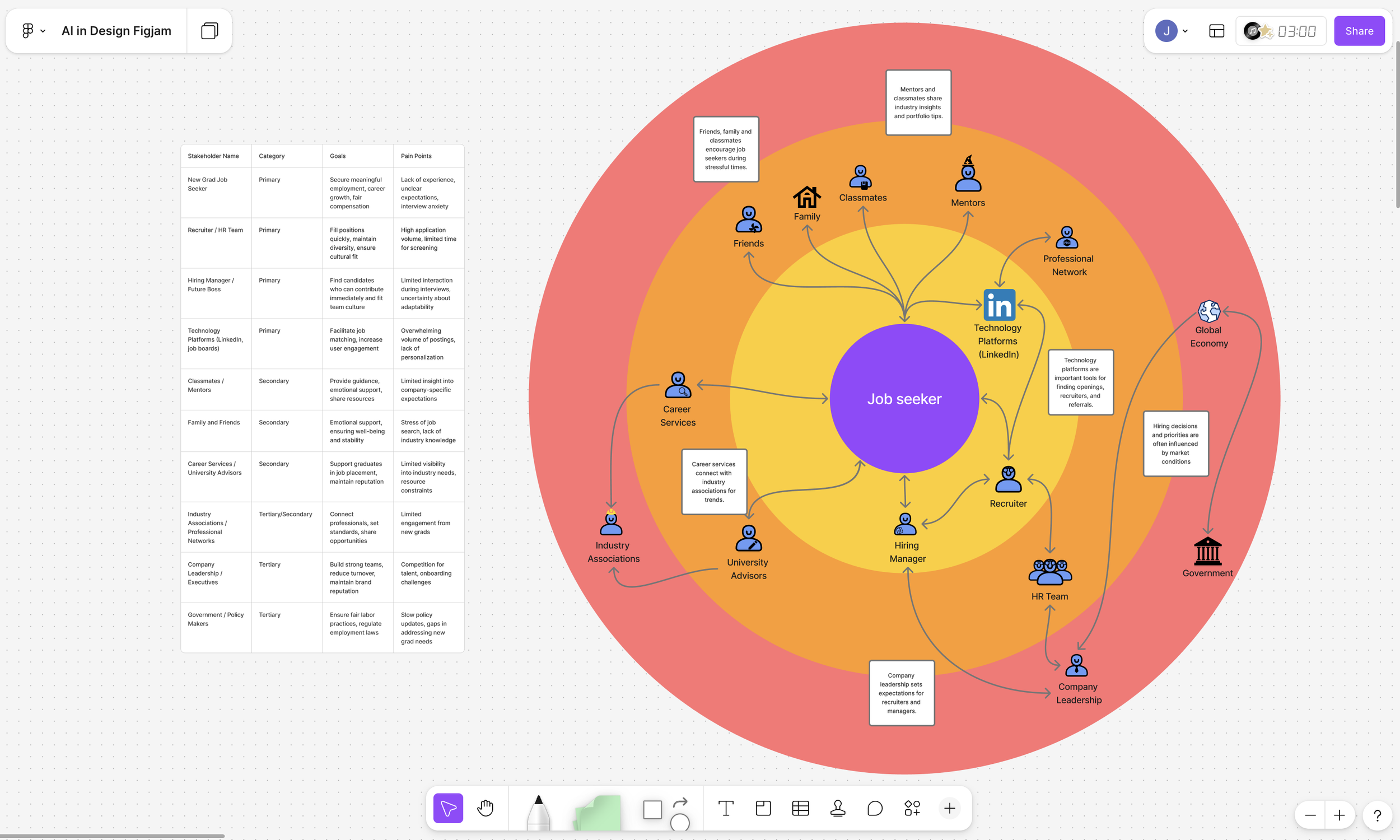

Secondary Research and Ecosystem Mapping

How might we provide timely, useful feedback to candidates after interviews so they learn and stay motivated, even when they do not get an offer?

The Concept

Landing Page for PeerView

Prototype of the PeerView Platform

PeerView explores a mentorship-style platform where job seekers share an interview experience, receive structured feedback from verified mentors, and practice stronger responses for next time. We designed three touchpoints that tell this story end to end: a social campaign that meets people where they already are, a landing page that explains what PeerView is and why it matters, and a mentorship interface that shows what feedback and conversation could actually feel like.

Our methodology centered on prototyping three connected parts of the experience using different tools and AI to help us iterate. For marketing, we created social posts in Canva and used ChatGPT to generate multiple headline and caption directions that we refined based on feedback. For the landing page, we used Cursor to build the first draft, then ran user interviews and fed the transcripts into NotebookLM to create concise summaries of what people found confusing or compelling. We shared those summaries and the live page with ChatGPT to get concrete advice and prompts for how to improve the content and structure, then went back into Cursor to implement the changes. For the platform itself, we designed the mentor feedback interface in Figma and Figma Make, using AI to explore different ways to structure feedback and draft example scripts before rewriting them to be more specific, human, and actionable. Across all three parts, AI gave us breadth of options, and critique and team judgment drove which versions we kept or changed.

Marketing material created with Canva AI

Reflection and Stance on AI

Working on this project and in the AI in Design course made me much more intentional about when AI actually belongs in my process. I treat AI like any other tool: it needs to either improve how I work or improve the quality of the final output. If it does neither, then using it adds ethical and environmental cost without a good reason, and that alone is enough for me to pause.

I use AI most often as a research partner, a synthesis helper, and a way to check for risks and blind spots. Coming from a business and marketing background, I know I am prone to confirmation bias, especially when I am working alone. Inviting AI into the process gives me alternative interpretations of interviews, surfaces patterns I may have missed, and forces me to look at risks that do not fit my original narrative. I still start by building my own structure and point of view, then ask AI to expand, question, or pressure test it rather than generate ideas from scratch.

Over time, I have developed a set of criteria that I apply whenever I reach for these tools. I ask whether my own thinking is still central, whether AI is reducing bias instead of amplifying it, and whether it is introducing any new ethical concerns such as privacy issues or misrepresentation. I also pay attention to places where AI makes things look more polished than they really are, especially in prototypes, since that can distract users from the core idea we are trying to test. Going forward, I want to be transparent about where AI shows up in my work and hold it to the same standard as any other design material. It should make the work more thoughtful, more rigorous, and more grounded in reality, not just faster.

The writing and illustrations on this page were created with the help of ChatGPT and Gemini Nano Banana.